Spatial and realistic audio experience from the cloud

Validating and demonstrating new technologies is one of the main tasks of T-Labs. This is often done with demonstrators that bring innovations to life and clearly illustrate their practical applications and benefits.

One example is the demonstrator for the new 3GPP voice communication codec “Immersive Voice and Audio Services” (IVAS), which is available as of 3GPP Release 18. IVAS enables the transmission of spatial audio over mobile networks and is optimized for highly efficient compression of immersive audio. The result is immersive audio at very low bandwidth, with low latency and low computational load on end devices.

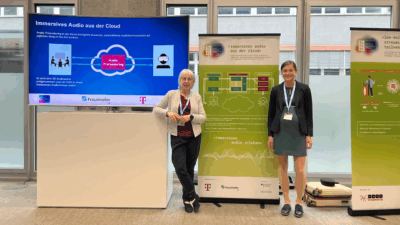

T-Labs project manager Vivien Helmut (left) and Karin Prebeck, project manager at Fraunhofer IIS

Because standardization in 3GPP was completed only recently, the codec is not yet supported by end devices on the market. To make the codec's potential accessible to a broad target group of audio experts, device manufacturers and service providers, T-Labs and its partner Fraunhofer IIS have developed a demonstrator that lets users experience the codec in the browser. Because playback happens in the browser, the demonstration is available on almost any end device.

The demonstrator presents an IVAS audio recording with several speakers as a video conference. A cloud server converts the audio stream into a browser-compatible format, and additional metadata from the audio stream drives the visual presentation in the browser. The participants' movements are captured by XR devices or by navigation in the browser and transmitted to the cloud server, which converts the audio stream in sync with those movements. As a result, participants get a spatial, realistic audio experience in the virtual environment.
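The geometric idea behind rendering "in sync with movements" can be illustrated with a minimal sketch. It is not the IVAS renderer or any API of the demonstrator; the `ListenerPose` message, the coordinate convention and the `relative_azimuth` function are assumptions made purely for illustration. The sketch shows how a server could turn a received pose and a source position into the direction from which that source should be heard.

```python
import math
from dataclasses import dataclass


@dataclass
class ListenerPose:
    """Hypothetical pose message sent by the browser or XR device (assumed format)."""
    x: float        # position in metres
    y: float
    yaw_deg: float  # heading: 0 = facing +y, positive = turned clockwise (to the right)


def relative_azimuth(pose: ListenerPose, source_x: float, source_y: float) -> float:
    """Return the direction of a source relative to where the listener is facing.

    The result is in degrees in the range [-180, 180);
    positive values mean the source is to the listener's right.
    """
    dx = source_x - pose.x
    dy = source_y - pose.y
    # World-frame bearing of the source, measured clockwise from the +y axis.
    bearing = math.degrees(math.atan2(dx, dy))
    # Subtract the listener's heading and wrap the result into [-180, 180).
    return (bearing - pose.yaw_deg + 180.0) % 360.0 - 180.0
```

In such a setup, the server would recompute this angle for every audio source whenever a new pose arrives and pass it to the spatial renderer before encoding the browser-compatible stream.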

“To make the advantages of a new codec, especially a spatial audio codec like IVAS, tangible, you have to be able to try it out on as many end devices as possible. With the cloud rendering technology for IVAS developed by FhG IIS and T-Labs, this is now possible on all end devices that support a browser, from PCs to smartphones and tablets to VR glasses,” explains Sven Wischnowsky, Senior Software Engineer at T-Labs.

The demonstrator was implemented as part of the “Toolkit for Hybrid Events – ToHyVe” project, funded by the German Federal Ministry for Economic Affairs and Energy (BMWi). In the project, tools and components for implementing hybrid events were developed and integrated into numerous demonstrators (further information at www.tohyve.de).

A first potential application for the codec already exists: audio recordings encoded with IVAS are well suited for integration into hybrid events, that is, physical events such as festivals, concerts and conferences that are held in parallel in virtual formats. The metadata contained in the audio recordings can be used directly for presentation in server-based virtual worlds.

The demonstrator fully achieved its aim: users of the demo immediately came up with a wide range of ideas for future applications. For example, each audio source could be represented on the end device by the speaker's profile picture or a customized avatar.

The GSMA is currently working on specifications that define which IVAS features should be used for future immersive communication and which functions as many end devices as possible should support to enable this service. It will be interesting to see which applications device manufacturers and service providers plan to integrate into their devices in the coming months.